Post by AudioHTIT on Sept 8, 2021 0:01:34 GMT -5

> Should the newer firmware have these fixes? But there's still the firmware variable.

We would think so, but we also know that the RMC-1 tested very differently between two firmware versions (pre/post 1.9), and we know that fixing one thing can break (or change) another. So to really put this to rest, I think we need to see current measurements of the XMC-2 and RMC-1 on 2.3. That could tell us the RMC & XMC are the same, but it might also tell us the RMC-1 isn't the same as it used to be. I'm not expecting this; it's just possible.

Post by audiosyndrome on Sept 8, 2021 8:02:15 GMT -5

> I have avoided this conversation due to the potential of just what it turned into, that being a technical pi**ing match. Worth pointing out many engineers do not put much value in SINAD measurements.

SINAD is ONE of many measurements in each review and is primarily used for a quantitative ranking. The bulk of the remaining measurements give a clear insight into the potential performance of the unit under test (if one understands the measurements). As an engineer (retired) I would challenge your comment “MANY engineers do not put much value in SINAD measurements”. Otherwise good post. 👍 Russ

KeithL

Administrator

Posts: 10,261

Post by KeithL on Sept 8, 2021 11:45:01 GMT -5

As I understand it SINAD is a relatively widely used metric - FOR RADIO TRANSMITTERS. However I don't know of any major audio magazine or audio review site that uses it.

While it is just another way of expressing THD+N, S/N, and THD, it cannot be directly compared to any of those metrics commonly used by other audio reviewers.

(Which makes it sort of like those special screws that only fit that special screwdriver, that you can only buy from that one company, which nobody ever seems to have in stock.)

> I have avoided this conversation due to the potential of just what it turned into, that being a technical pi**ing match. Worth pointing out many engineers do not put much value in SINAD measurements.

> SINAD is ONE of many measurements in each review and is primarily used for a quantitative ranking. The bulk of the remaining measurements give a clear insight into the potential performance of the unit under test (if one understands the measurements). As an engineer (retired) I would challenge your comment “MANY engineers do not put much value in SINAD measurements”. Otherwise good post. 👍 Russ

Post by KeithL on Sept 8, 2021 11:59:08 GMT -5

The problem is that the overall "art" is no longer so simple that it can be reduced to a couple of measurements (if that has ever been the case).

For example, while I go to several expensive restaurants, I have never asked any of them for a printout of the purity of the water in my glass at the table. I guess it might be interesting... and I might infer something about their general care in preparing things based on the results... but it wouldn't tell me all that much about the food...

I'm also pretty sure that, even though a Rembrandt painting would probably look quite interesting under a microscope, few art lovers have ever taken that step.

I suppose it's possible that a two-channel DAC with a THD of 0.001% might be audibly better than one with a THD of 0.002% - although I very much doubt it. I'm not prepared to get into a discussion about exactly how much THD is audible - but I'm pretty sure it would take a lot more than that.

However neither of those two-channel DACs is going to compare favorably to a good quality surround sound processor with ten times that much THD - at least not if you want to listen to an Atmos movie on it.
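For scale, here are the two THD figures in the decibel terms used elsewhere in the thread, assuming the usual amplitude convention (dB = 20 log10 of the distortion-to-signal ratio):

$$
20\log_{10}\!\left(\frac{0.001}{100}\right) = -100\ \text{dB}, \qquad
20\log_{10}\!\left(\frac{0.002}{100}\right) \approx -94.0\ \text{dB}
$$

$$
20\log_{10} 2 \approx 6.0\ \text{dB}, \qquad 20\log_{10} 10 = 20\ \text{dB}
$$

So doubling THD moves the figure by about 6 dB, and ten times the 0.002% figure (0.02%) would sit at roughly -74 dB.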

I agree that, among audiophiles, there are far sillier and less sensible obsessions... but...

> Audible or not, objective measurements provide a relative indication of the "state of the art". Most people will disregard poor relative measurements because they are invested in their purchases (they paid good money for it). Measurements, as long as they are technically accurate with repeatability and reproducibility, are valuable to the average consumer as an indicator of the attention to detail that the designer has taken to create a product. Many of us do not have the luxury of doing comparative listening of multiple suppliers of a piece of equipment due to a lack of businesses that will, or can, accommodate that. Focusing on measurements is a minor obsession in a hobby that has participants fretting about interconnects, power conditioners, power cords, equipment isolation, absorption, diffusion.... Bottom line: if you want pure 2-channel performance, a pre-pro is not your best choice (unless of course you like it).

Post by donh50 on Sept 8, 2021 12:25:08 GMT -5

SINAD in dB is equivalent to THD+N in dB in most any audio (or RF) product review. SINAD is a major part of the IEEE Standards for ADC and DAC testing (at any frequency) so not some unusual or little-known spec; it is very widely used.

For music and such, the IHF and earlier standards set the bar at 1% (-40 dB). There have been many experiments through the years. The last ones I subjected various friends and Internet denizens to aligned well with that metric. In DBTs with a pure sine wave, 0.1% (-60 dB) was undetectable by almost everyone, and 1% (-40 dB) was detected by most everyone. Using two pure tones and IMD drove the threshold down a little, but with multiple tones or musical content even 5% or more was hard to detect.

For me, SINAD (THD+N) is a starting point; if it is good, chances are everything else is "OK" and inaudible. From there my first look is at the spectra of the noise and distortion, and so forth. SINAD dominated by noise may be less obtrusive than the same SINAD value dominated by a single tone or a few tones, like power supply noise. I usually hate analogies, but horsepower is a reasonable metric for a car that certainly does not tell the whole story. I want to know HP, but above a certain threshold it is not a major factor in a purchase. I tend to glance at the specs, then look at features and other things I am interested in, whether it's a car or an AVP.
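Because THD+N (and therefore SINAD) is a power sum of everything except the fundamental, quite different spectra can produce the same single number. A minimal sketch with made-up component levels (hypothetical values, not measurements of any processor):

```python
import math


def combine_db(levels_db):
    """Power-sum components given in dB relative to the fundamental."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


# Hypothetical spectra, not measurements of any real unit:
one_spur = combine_db([-86.0])        # a single dominant tone, e.g. supply-related
spread_out = combine_db([-92.0] * 4)  # four smaller components of equal level
print(f"{one_spur:.2f} dB vs {spread_out:.2f} dB")
```

Both come out at about -86 dB, which is why the spectrum, not just the headline figure, is worth a look.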

As for the water, I don't ask for a lab report (though I do for our home well), but will notice if one place has consistently better water than another. All hail Zeolite...

YMMV - Don

Post by rbk123 on Sept 8, 2021 13:12:49 GMT -5

> I agree that, among audiophiles, there are far sillier and less sensible obsessions... but...

I think the only real obsession now is just why the RMC-1 and XMC-2 measured differently for 2 channels. Amir can't confirm side by side any longer, since he doesn't have the RMC-1 and probably shipped the XMC-2 as well. The only option left is for Lonnie to hook up an RMC and see if he gets -85 to match his earlier measurement, or a different amount like Amir's. If that doesn't happen, then conjecture on each side of the argument will just continue to spin until people get tired.

Post by dwander on Sept 8, 2021 13:28:19 GMT -5

Purity of water? Art under a microscope? One of the reasons I returned my XMC-2 was the issues I was dealing with. They may not even have been deal breakers for me at the time, but I started to get concerned about the issues I didn't know about. I wondered what else might be wrong with the unit that wasn't easy to discover and may or may not be a serious issue. Also, a few run-ins with customer service were not great experiences. I understood that I wasn't going to get an answer to my questions and issues right then, but I wasn't even getting an inkling that my issues were important and that anything would happen after the phone call ended. So I decided what was best for me was to go to another product. Since then, I understand new firmware updates have helped solidify the functionality of the units, and that's really great. Nonetheless, in my opinion, comparisons like the ones in the post above are just so evasive and dismissive of customers' concerns.

Post by monkumonku on Sept 8, 2021 13:30:32 GMT -5

> I agree that, among audiophiles, there are far sillier and less sensible obsessions... but...

> I think the only real obsession now is just why the RMC-1 and XMC-2 measured differently for 2 channels. Amir can't confirm side by side any longer, since he doesn't have the RMC-1 and probably shipped the XMC-2 as well. The only option left is for Lonnie to hook up an RMC and see if he gets -85 to match his earlier measurement, or a different amount like Amir's. If that doesn't happen, then conjecture on each side of the argument will just continue to spin until people get tired.

I know I'm tired of it! When it comes down to it, I'll take what Lonnie has to say.

Chris

Emo VIPs

Posts: 424

Post by Chris on Sept 8, 2021 13:38:29 GMT -5

> As I understand it SINAD is a relatively widely used metric - FOR RADIO TRANSMITTERS. However I don't know of any major audio magazine or audio review site that uses it. While it is just another way of expressing THD+N, S/N, and THD, it cannot be directly compared to any of those metrics commonly used by other audio reviewers.
>
> (Which makes it sort of like those special screws that only fit that special screwdriver, that you can only buy from that one company, which nobody ever seems to have in stock.)

> As an engineer (retired) I would challenge your comment “MANY engineers do not put much value in SINAD measurements”. Otherwise good post. 👍 Russ

My understanding is you can directly convert THD+N into SINAD with a simple Excel expression, "=-20*LOG10(C3/100)", where cell C3 holds the THD+N figure in percent. For example, 0.005% THD+N works out to about 86.02 dB SINAD. Many reviews with measurements publish the THD+N figure, so this allows for the translation and thus comparison to other devices.
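The spreadsheet conversion can be sketched in Python as below. It assumes THD+N is quoted in percent relative to the fundamental and treats SINAD as the reciprocal of THD+N expressed in dB, which is a very close approximation at these levels. The sign is flipped so SINAD comes out positive (a bare `20*LOG10(...)` of the ratio returns the negative, THD+N-in-dB value). The function names are illustrative.

```python
import math


def thd_n_percent_to_sinad_db(thd_n_percent):
    """THD+N in percent of the fundamental -> SINAD in dB (positive number)."""
    return -20 * math.log10(thd_n_percent / 100)


def sinad_db_to_thd_n_percent(sinad_db):
    """SINAD in dB -> THD+N in percent (the inverse of the function above)."""
    return 100 * 10 ** (-sinad_db / 20)


print(round(thd_n_percent_to_sinad_db(0.005), 2))   # the 0.005 % example
print(round(sinad_db_to_thd_n_percent(86.02), 4))   # and back again
```

With 0.005% this gives about 86.02 dB, and the inverse recovers roughly 0.005%.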

Lsc

Emo VIPs

Posts: 3,434

Post by Lsc on Sept 8, 2021 13:50:26 GMT -5

> I agree that, among audiophiles, there are far sillier and less sensible obsessions... but...

> I think the only real obsession now is just why the RMC-1 and XMC-2 measured differently for 2 channels. Amir can't confirm side by side any longer, since he doesn't have the RMC-1 and probably shipped the XMC-2 as well. The only option left is for Lonnie to hook up an RMC and see if he gets -85 to match his earlier measurement, or a different amount like Amir's. If that doesn't happen, then conjecture on each side of the argument will just continue to spin until people get tired.

Exactly. The only reason for not doing so is because the numbers are actually different. The funny thing is that if the numbers were different and Emotiva published them beforehand, then you would have had folks like myself spending a little more and getting the RMC-1L (now, this is assuming that the RMC-1 and RMC-1L are equal, something that was once obvious but not so much anymore). I'd like to see all 3 on the test bench for L/R and then a second measurement for center and a surround channel.

cawgijoe

Emo VIPs

"When you come to a fork in the road, take it." - Yogi Berra

Posts: 5,033

Post by cawgijoe on Sept 8, 2021 13:58:56 GMT -5

> I think the only real obsession now is just why the RMC-1 and XMC-2 measured differently for 2 channels. Amir can't confirm side by side any longer, since he doesn't have the RMC-1 and probably shipped the XMC-2 as well. The only option left is for Lonnie to hook up an RMC and see if he gets -85 to match his earlier measurement, or a different amount like Amir's. If that doesn't happen, then conjecture on each side of the argument will just continue to spin until people get tired.

> Exactly. The only reason for not doing so is because the numbers are actually different. The funny thing is that if the numbers were different and Emotiva published them beforehand, then you would have had folks like myself spending a little more and getting the RMC-1L (now, this is assuming that the RMC-1 and RMC-1L are equal, something that was once obvious but not so much anymore). I'd like to see all 3 on the test bench for LCR and then a second measurement for all channels.

I find this all very strange, especially since all three units have essentially the same architecture (correct me if I'm wrong) and also use the same firmware. Probably a good idea to put three units on the bench at Emotiva to test.

Post by derwin on Sept 8, 2021 14:06:06 GMT -5

> Exactly. The only reason for not doing so is because the numbers are actually different. The funny thing is that if the numbers were different and Emotiva published them beforehand, then you would have had folks like myself spending a little more and getting the RMC-1L (now, this is assuming that the RMC-1 and RMC-1L are equal, something that was once obvious but not so much anymore). I'd like to see all 3 on the test bench for LCR and then a second measurement for all channels.

> I find this all very strange, especially since all three units have essentially the same architecture (correct me if I'm wrong) and also use the same firmware. Probably a good idea to put three units on the bench at Emotiva to test.

Hard not to think Emotiva is up to something fishy until they stop dodging this ☹️ I spent a lot of money here to get what I thought was a great product with a few SW bugs… Hard not to wonder whether I spent a lot of money on a mediocre product with a lot of bugs.

Post by donh50 on Sept 8, 2021 14:09:20 GMT -5

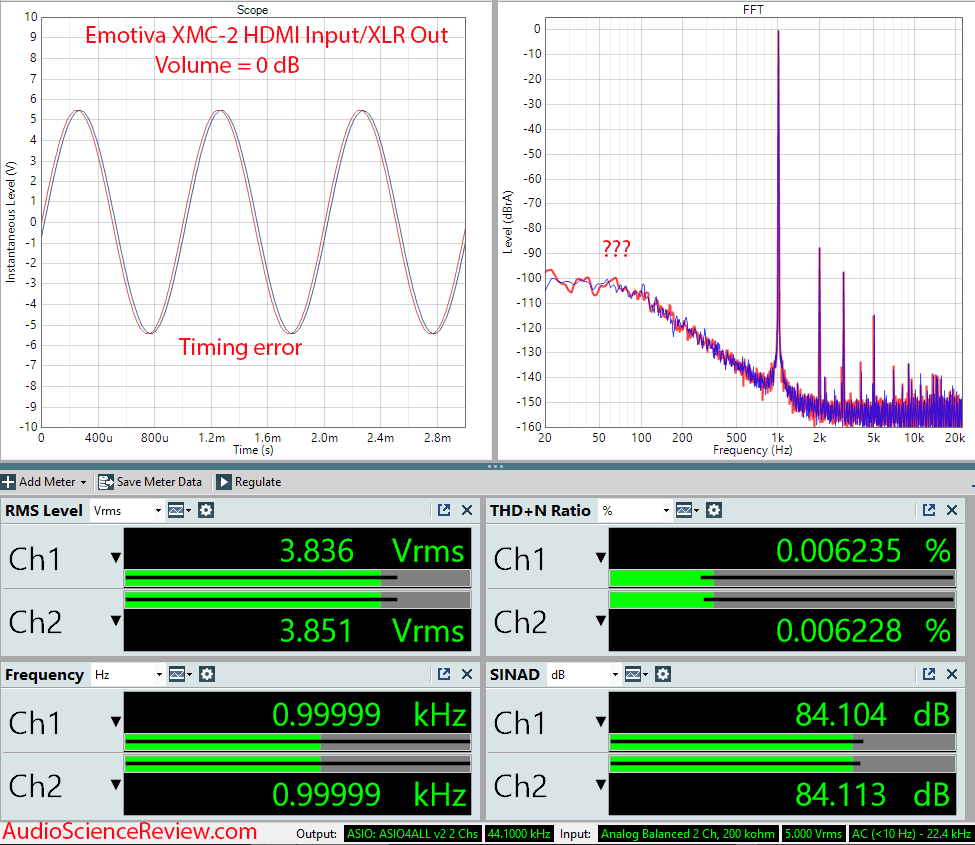

> Exactly. The only reason for not doing so is because the numbers are actually different. The funny thing is that if the numbers were different and Emotiva published them beforehand, then you would have had folks like myself spending a little more and getting the RMC-1L (now, this is assuming that the RMC-1 and RMC-1L are equal, something that was once obvious but not so much anymore). I'd like to see all 3 on the test bench for LCR and then a second measurement for all channels.

> I find this all very strange, especially since all three units have essentially the same architecture (correct me if I'm wrong) and also use the same firmware. Probably a good idea to put three units on the bench at Emotiva to test.

The RMC-1 review used earlier FW, 1.9 I think, and from other readers' responses the bass management bug was introduced in 2.1 or 2.2. The XMC-2 was tested using 2.3, so it has the bass bug, and that may account for the rising LF noise floor. I do not know if the strange phase shift and poorer distortion performance are also FW-related. I hope so, as that is an easier fix than replacing HW.

Post by Ex_Vintage on Sept 8, 2021 14:55:19 GMT -5

A firmware testing norm is "continuous regression": never stop testing (unless your coverage is 100%, and even with excellent test automation that is impossible because of the endless permutations of interactive features).
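In the spirit of "never stop testing", here is a minimal sketch of one automated check that could run against every firmware build: compare each channel's measured SINAD with a stored baseline and flag drops beyond a tolerance. The channel names, baseline numbers, tolerance and the `find_regressions` function are all hypothetical placeholders, not anyone's actual test suite or measurements; a real regression suite would cover far more than one metric.

```python
# Hypothetical baseline: per-channel SINAD (dB) recorded on an earlier firmware
# build. Placeholder numbers for illustration, not measurements of any unit.
BASELINE_DB = {"L": 100.0, "R": 100.0, "C": 99.0}


def find_regressions(measured_db, baseline_db=BASELINE_DB, tolerance_db=1.0):
    """Return channels whose SINAD fell more than tolerance_db below baseline.

    A channel missing from the new measurement is treated as a regression.
    """
    return sorted(
        channel
        for channel, reference in baseline_db.items()
        if measured_db.get(channel, float("-inf")) < reference - tolerance_db
    )


# Hypothetical run against a new firmware build:
print(find_regressions({"L": 99.5, "R": 85.0, "C": 98.7}))
```

Here only the right channel would be flagged, since the other two stay within the 1 dB tolerance.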

Post by garbulky on Sept 8, 2021 15:05:15 GMT -5

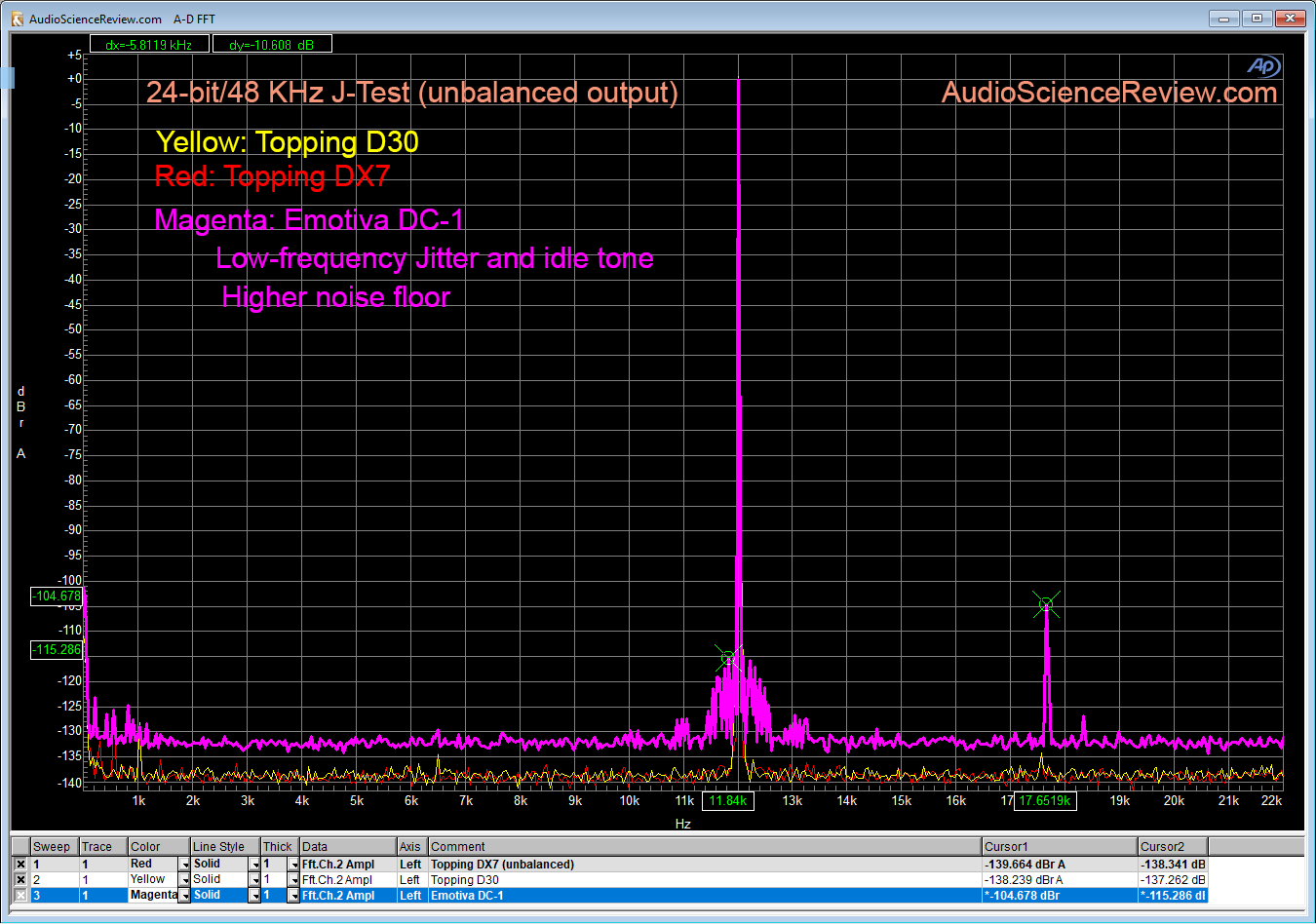

I just don't understand how you guys can go backwards. It's not like you can't make devices that measure well (see the DC-1 vs. the XMC-2).

tparm

Minor Hero

Posts: 16

Post by tparm on Sept 8, 2021 15:22:37 GMT -5

> I find this all very strange, especially since all three units have essentially the same architecture (correct me if I'm wrong) and also use the same firmware. Probably a good idea to put three units on the bench at Emotiva to test.

> Hard not to think Emotiva is up to something fishy until they stop dodging this ☹️ I spent a lot of money here to get what I thought was a great product with a few SW bugs… Hard not to wonder whether I spent a lot of money on a mediocre product with a lot of bugs.

I'd be happy with Emotiva just putting one RMC or XMC on their bench and publishing the results.

Post by louron on Sept 8, 2021 16:41:23 GMT -5

> As I understand it SINAD is a relatively widely used metric - FOR RADIO TRANSMITTERS. However I don't know of any major audio magazine or audio review site that uses it.
>
> While it is just another way of expressing THD+N, S/N, and THD, it cannot be directly compared to any of those metrics commonly used by other audio reviewers.
>
> (Which makes it sort of like those special screws that only fit that special screwdriver, that you can only buy from that one company, which nobody ever seems to have in stock.)

> As an engineer (retired) I would challenge your comment “MANY engineers do not put much value in SINAD measurements”. Otherwise good post. 👍 Russ

Here we go again! It makes me sad that we are slowly trying to shift the discussion and say that SINAD isn't that important. That is what is unacceptable as a reaction to a simple question. Customers have bought the XMC-2 based on Emotiva's representation that it was just about as good as the RMC-1 if you only consider L, R and C. Many of us took into account the ASR measurements of the RMC-1 and the representation from Emotiva to make our buying decision, and thus expected to see similar results. Period. If SINAD is that different, chances are performance is too. Seems pretty obvious. Is an attempt to denigrate that specific spec (SINAD) an admission that the XMC-2 has an 85 dB SINAD and the RMC-1 around 100 dB? Is that the case? That is the question. Is it or not? Or is the difference due to other factors, like measuring 8 channels instead of 2? That should be quite simple to answer. Very simple transparency.
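For reference, the 85 dB and 100 dB SINAD figures translate back to THD+N as follows, assuming the usual amplitude convention:

$$
\text{THD+N}\ (\%) = 100 \times 10^{-\text{SINAD}/20}
$$

$$
85\ \text{dB} \;\rightarrow\; 100 \times 10^{-4.25} \approx 0.0056\,\%, \qquad
100\ \text{dB} \;\rightarrow\; 100 \times 10^{-5} = 0.001\,\%
$$

The 15 dB gap is a factor of $10^{15/20} \approx 5.6$ in noise-plus-distortion amplitude relative to the signal.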

Post by cawgijoe on Sept 8, 2021 17:30:05 GMT -5

> I find this all very strange, especially since all three units have essentially the same architecture (correct me if I'm wrong) and also use the same firmware. Probably a good idea to put three units on the bench at Emotiva to test.

> Hard not to think Emotiva is up to something fishy until they stop dodging this ☹️ I spent a lot of money here to get what I thought was a great product with a few SW bugs… Hard not to wonder whether I spent a lot of money on a mediocre product with a lot of bugs.

I highly doubt they are up to anything fishy. I have no regrets trading my XMC-1 in on the 2. Where are all the bugs you are talking about?

Post by adaboy on Sept 8, 2021 17:44:30 GMT -5

> Hard not to think Emotiva is up to something fishy until they stop dodging this ☹️ I spent a lot of money here to get what I thought was a great product with a few SW bugs… Hard not to wonder whether I spent a lot of money on a mediocre product with a lot of bugs.

> I highly doubt they are up to anything fishy. I have no regrets trading my XMC-1 in on the 2. Where are all the bugs you are talking about?

The past and current bugs are listed here: RMC-1/RMC-1L/XMC-2 Owners Thread (Post Firmware v1.10)

Post by louron on Sept 8, 2021 18:41:42 GMT -5

> Hard not to think Emotiva is up to something fishy until they stop dodging this ☹️ I spent a lot of money here to get what I thought was a great product with a few SW bugs… Hard not to wonder whether I spent a lot of money on a mediocre product with a lot of bugs.

> I highly doubt they are up to anything fishy. I have no regrets trading my XMC-1 in on the 2. Where are all the bugs you are talking about?

Seriously… As an XMC-2 owner, I can send you a list. Like many, I was living with them, frustrated at times but thinking I had a processor measuring close to the top… now that is highly doubtful. But I still trust Emotiva to figure out a way to address the situation: measure and communicate frankly, and if needed take the right actions for their loyal customers. No more denial without proof and measurements, and no denigrating of SINAD as a spec.
